Hello everyone

My team has recently built a number of large-scale environments in VC for project bids, including an entire beverage factory, docks, and so on, and it is not going well. The problem we keep facing is that “this damn model data is really too big”. We have to spend a lot of effort retouching triangles to reduce the file size; most of the time we can get it down to about 1/3 of the original, but that takes two or three working days. That is our current dilemma.

Recently, my boss told me to look into Siemens’ Visualization Mockup; he said Visualization Mockup can provide models at an Ultra Light level. My confusion is this: whatever the model is, as long as it is still operated in VC, the final saving and packaging is still done by VC, so I don’t think an Ultra Light model can solve the problem. But my boss is confident about it.

I don’t know what the community thinks about this, but customers always end up comparing VC to PDPS, because working on an entire production line is not as easy in VC as it is in PDPS, so the comparison is inevitable. If I switch to Ultra Light models, will working in the VC environment become easier? If so, how do I get an Ultra Light model?

Thank you for listening to my complaints, although I’m not sure whether this post will be ignored like my previous ones.

Have a great weekend, everyone!

I don’t know anything about Siemens technology but I wonder about your use case in VC.

As I understand it, you got a massive CAD assembly of whole buildings or areas, detailed down to the nuts and bolts, from somewhere, and then what?

Why do you need to have all that geometry in VC? So you can build your actual simulation layout inside it and then render some nice video?

I suspect that the actual model is not necessary for making or running your VC simulation. You only really need a floor plan and maybe some boxes to represent areas which are not accessible.

Then when it comes to visualizing the whole thing together for a video, I think you could get your CAD model into Blender or some other 3D visualization software somehow and merge it with 3D animation recorded from VC (FBX export, Blender add-on) to create your combined model for nice visuals.

I think this kind of use case is also what the NVIDIA Omniverse connector is for. You can have a massive model on the Omniverse side for rendering, while all the actual simulation-based animation comes from a simple model in VC.

Thank you for your reply,

First, I need to clarify a few points regarding your concerns. We don’t actually include all the details of the entire building, only the parts related to the production line. For example, my upstream party (usually my client) will directly provide me with a BIW in STEP format from CATIA. This model may have millions of triangles, and after saving it as a vcmx file it can be around 50-100 MB. Through various methods, such as decimation, voxelization, and collapsing, we can eventually reduce it to around 100,000 triangles (these numbers might not be exact). That is close to the BIW that comes with VC, but unfortunately VC still lags when running simulations. This is frustrating because it’s not even a large environment, just a single workstation.
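For reference, one common way to do the kind of voxelization/collapsing mentioned above is vertex clustering: snap every vertex to a grid cell, merge each cell’s vertices into one, and drop triangles that collapse. The following is a minimal Python sketch under that assumption; it is not what VC or any particular CAD tool does internally, and `cluster_decimate` and `grid_mesh` are hypothetical names used only for illustration.

```python
# Minimal vertex-clustering decimation sketch (hypothetical, not VC's or any
# CAD tool's actual algorithm).

def cluster_decimate(vertices, triangles, cell_size):
    """Merge all vertices that fall into the same cubic grid cell of edge
    `cell_size` into their average position, then drop triangles that
    collapse to a line or point, plus duplicate triangles."""
    cell_to_cluster = {}   # grid cell -> cluster index
    sums, counts = [], []  # running position sums / vertex counts per cluster
    remap = []             # old vertex index -> cluster index
    for v in vertices:
        # Floor division also handles negative coordinates correctly.
        cell = tuple(int(c // cell_size) for c in v)
        if cell not in cell_to_cluster:
            cell_to_cluster[cell] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        k = cell_to_cluster[cell]
        for axis in range(3):
            sums[k][axis] += v[axis]
        counts[k] += 1
        remap.append(k)

    new_vertices = [tuple(s / n for s in total) for total, n in zip(sums, counts)]

    seen, new_triangles = set(), []
    for a, b, c in triangles:
        a, b, c = remap[a], remap[b], remap[c]
        if a == b or b == c or a == c:
            continue  # degenerate: two corners merged into the same cluster
        key = tuple(sorted((a, b, c)))  # ignores winding, so flipped duplicates go too
        if key in seen:
            continue
        seen.add(key)
        new_triangles.append((a, b, c))
    return new_vertices, new_triangles


def grid_mesh(n):
    """Flat n x n test grid in the z = 0 plane: (n+1)^2 vertices, 2*n^2 triangles."""
    verts = [(float(i), float(j), 0.0) for i in range(n + 1) for j in range(n + 1)]

    def idx(i, j):
        return i * (n + 1) + j

    tris = []
    for i in range(n):
        for j in range(n):
            a, b, c, d = idx(i, j), idx(i + 1, j), idx(i + 1, j + 1), idx(i, j + 1)
            tris += [(a, b, c), (a, c, d)]
    return verts, tris


verts, tris = grid_mesh(10)
print(len(verts), len(tris))   # 121 200
v2, t2 = cluster_decimate(verts, tris, cell_size=5.0)
print(len(v2), len(t2))        # 9 8
```

A larger `cell_size` removes more triangles but also more detail, which is why the cell size (or a target triangle count) usually has to be tuned per model.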

As for video production, that does run more smoothly. The environment exported from VC to Blender doesn’t lag (my specs are an i5 14400F, a 4060 Ti and 32 GB RAM), but the issue is that I first need to set up the logic in VC before exporting it, right? The pessimistic part is that, given the environment I’m working in (I’m not sure this translation is accurate), animation videos alone are unlikely to advance the bidding process. People want to see the interaction between the simulated environment and reality, including signal transitions, event triggers, and real-time statistics. This gives them a sense of security, so they feel they’re not being deceived. So, many times, we end up showing the simulation directly in VC, which is also a challenge.

Wow, I feel like I’ve said a lot (sorry, I’m typing this on my phone). Thank you again for your reply. I know this issue may not have a short-term solution, but at least this post hasn’t disappeared like my previous ones.

Lastly, once again, thank you, and I sincerely appreciate it. Have a great weekend!

- BAD

OK, so it is not just about “static scenery” geometry, but you have complex models more or less relevant to the simulation.

The thing is, just rendering and moving around meshes with up to millions of triangles shouldn’t really be a problem in VC. The performance issues arise when meshes are copied, components are rebuilt, or, in general, when any operation is done that requires iterating through every triangle.

So, to retain usable performance with complex geometry, you must ensure that no component rebuilds occur and that the geometry remains shared between instances of the same component. Using raycast sensors is also a problem, because depending on how your geometry is organized, the ray may need to be intersected with every triangle. Ray casting does a fast lookup using bounding boxes first, but then may need to check every triangle of a mesh if the ray hits that mesh’s bounding box.
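To make the bounding-box-first behaviour concrete, here is a generic Python sketch of a two-phase ray cast: a slab test against each mesh’s axis-aligned bounding box, then a Möller-Trumbore test against every triangle of each mesh whose box was hit. This is a standard textbook technique assumed here for illustration, not VC’s actual implementation or API; `Mesh`, `raycast` and `grid` are hypothetical names.

```python
# Generic two-phase ray cast (illustrative, not VC's internals):
# bounding-box test per mesh, then every triangle of each mesh whose box is hit.

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_hits_aabb(origin, direction, box_min, box_max):
    """Slab test: does the ray (t >= 0) touch the axis-aligned box?"""
    t_near, t_far = 0.0, float("inf")
    for axis in range(3):
        o, d = origin[axis], direction[axis]
        lo, hi = box_min[axis], box_max[axis]
        if abs(d) < 1e-12:                 # ray parallel to this pair of slabs
            if o < lo or o > hi:
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return False
    return True

def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore test: distance along the ray to the hit, or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:                     # ray parallel to the triangle plane
        return None
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None

class Mesh:
    """Triangle list plus a bounding box computed once, up front."""
    def __init__(self, triangles):
        self.triangles = triangles         # list of (v0, v1, v2) vertex tuples
        pts = [p for tri in triangles for p in tri]
        self.box_min = tuple(min(p[i] for p in pts) for i in range(3))
        self.box_max = tuple(max(p[i] for p in pts) for i in range(3))

def raycast(origin, direction, meshes):
    """Return (nearest hit distance or None, number of triangle tests done)."""
    nearest, tests = None, 0
    for mesh in meshes:
        if not ray_hits_aabb(origin, direction, mesh.box_min, mesh.box_max):
            continue                       # broad phase: whole mesh rejected cheaply
        for tri in mesh.triangles:         # narrow phase: every triangle is tested
            tests += 1
            t = ray_triangle(origin, direction, *tri)
            if t is not None and (nearest is None or t < nearest):
                nearest = t
    return nearest, tests

def grid(n, x_offset=0.0):
    """Flat n x n grid of unit squares at z = 0, two triangles per square."""
    tris = []
    for i in range(n):
        for j in range(n):
            a, b = (i + x_offset, j, 0.0), (i + 1 + x_offset, j, 0.0)
            c, d = (i + 1 + x_offset, j + 1, 0.0), (i + x_offset, j + 1, 0.0)
            tris += [(a, b, c), (a, c, d)]
    return Mesh(tris)

floor, far_away = grid(10), grid(10, x_offset=100.0)
down = (0.0, 0.0, -1.0)
print(raycast((0.25, 0.5, 5.0), down, [floor, far_away]))   # (5.0, 200)
print(raycast((50.0, 50.0, 5.0), down, [floor, far_away]))  # (None, 0)
```

The second number is the point: once the ray enters a mesh’s bounding box, the cost scales with that mesh’s triangle count, no matter where the actual hit is, while meshes whose boxes are missed cost almost nothing.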

Loading vcmx files with complex geometry is unfortunately always slow because VC always does a rebuild of every component and certain relatively heavy pre-calculations for each mesh.

Here is a kind of checklist for retaining simulation performance with complex geometry:

- Don’t create or change values of component properties. These may cause a rebuild or copying of the geometry to make the component unique. Using component properties in PM product types is generally problematic.

- Don’t use raycast sensors or raycasting from API. Also don’t use collision detection if not truly necessary.

- Don’t use the Material property at component level, as assigning a value to it can apparently make the component unique.

- Don’t modify the geometry (or feature tree) of components during simulation, as that can cause rebuilds and copying. This includes things like adding, say, weld visualization geometry or moving frames. Modifying the user geometry container in a component should be fine, though.

- Consider organizing your component geometry into reasonable chunks and placing those in separate geometry features or link nodes, or even separate components. I’m not sure whether ray casting uses the bounding boxes of geometry sets (it probably does), but it will at least use the bounds of simulation nodes. Changing the visibility or material of a simulation node is also a very lightweight change, as it does not require rebuilding the geometry tree.

- Maybe don’t use complex geometry in PM product types in general. I’m not sure whether creating a product instance causes a rebuild or copying of the geometry, or in exactly which cases that might occur. I think it shouldn’t do a rebuild unless component properties are assigned, but I’m not sure.
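The chunking advice can be illustrated with a small, hypothetical Python sketch. Assuming the ray cast culls by the bounding box of each chunk (likely, but not confirmed, for geometry sets), splitting one large mesh into spatial chunks reduces how many triangles reach the per-triangle test. `split_by_x` and `candidate_triangles` are illustrative names, not VC API.

```python
# Hypothetical illustration: how many triangles survive bounding-box culling
# when one mesh is stored whole versus split into spatial chunks.

def ray_hits_aabb(origin, direction, box_min, box_max):
    """Slab test: does the ray (t >= 0) touch the axis-aligned box?"""
    t_near, t_far = 0.0, float("inf")
    for axis in range(3):
        o, d = origin[axis], direction[axis]
        lo, hi = box_min[axis], box_max[axis]
        if abs(d) < 1e-12:                 # ray parallel to this pair of slabs
            if o < lo or o > hi:
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return False
    return True

def bounds(triangles):
    """Axis-aligned bounding box (min corner, max corner) of a triangle list."""
    pts = [p for tri in triangles for p in tri]
    return (tuple(min(p[i] for p in pts) for i in range(3)),
            tuple(max(p[i] for p in pts) for i in range(3)))

def candidate_triangles(origin, direction, chunks):
    """Triangles that would still need a per-triangle test: all triangles of
    every chunk whose bounding box the ray hits."""
    total = 0
    for tris in chunks:
        box_min, box_max = bounds(tris)
        if ray_hits_aabb(origin, direction, box_min, box_max):
            total += len(tris)
    return total

def split_by_x(triangles, chunk_width):
    """Group triangles into chunks by the x coordinate of their first vertex."""
    chunks = {}
    for tri in triangles:
        chunks.setdefault(int(tri[0][0] // chunk_width), []).append(tri)
    return list(chunks.values())

def grid_triangles(n):
    """Flat n x n grid of unit squares at z = 0, two triangles per square."""
    tris = []
    for i in range(n):
        for j in range(n):
            a, b = (i, j, 0), (i + 1, j, 0)
            c, d = (i + 1, j + 1, 0), (i, j + 1, 0)
            tris += [(a, b, c), (a, c, d)]
    return tris

floor = grid_triangles(20)                 # 800 triangles, x and y from 0 to 20
origin, down = (2.5, 2.5, 5.0), (0.0, 0.0, -1.0)
print(candidate_triangles(origin, down, [floor]))               # 800
print(candidate_triangles(origin, down, split_by_x(floor, 5)))  # 200
```

The same ray tests a quarter of the triangles once the floor is split into four strips, because the three strips it does not enter are rejected by their bounding boxes alone.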

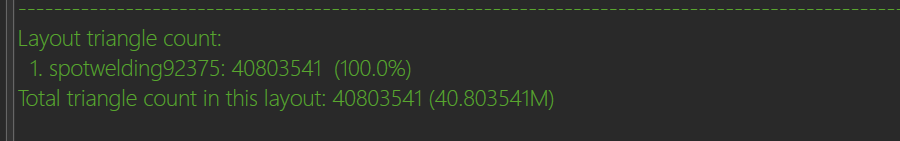

Yes, that is the problem. A single model with a million or so triangles is indeed fine, but the car is only a small part of the production line, and a single station may have tens of millions of triangles:

There are nearly 20 production lines (assembly and welding lines) in one section made up of such stations.

My ray sensors all refresh at intervals above 0.2 s; maybe I should give them a larger interval? However, you reminded me that current large-scale VC projects are mostly built with the Process module. Maybe using Process could make the simulation more lightweight?
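A rough way to reason about the sensor interval question is a worst-case cost model: tests per simulated second ≈ sensors ÷ interval × triangles per mesh hit. The Python sketch below assumes every sample enters one mesh’s bounding box and tests all of its triangles; the sensor count is hypothetical, the triangle count is taken from the ~100,000 figure mentioned earlier, and this is not measured VC behaviour.

```python
# Back-of-envelope cost model (assumptions, not measured VC behaviour):
# every sensor sample hits the bounding box of one mesh and then has to
# test every triangle of that mesh.

def triangle_tests_per_second(sensor_count, interval_s, triangles_per_mesh):
    """Worst-case per-triangle intersection tests per simulated second."""
    samples_per_second = sensor_count / interval_s
    return samples_per_second * triangles_per_mesh

# Hypothetical numbers: 10 sensors, meshes of ~100,000 triangles.
for interval in (0.2, 0.4, 1.0):
    tests = triangle_tests_per_second(10, interval, 100_000)
    print(f"interval {interval:.1f} s -> {tests:,.0f} triangle tests per simulated second")
```

Under this model, doubling the interval only halves the worst-case cost, while reducing the triangle count of the meshes the rays actually enter (or chunking them) can cut it by much more.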

Anyway, I’m going to try new things and keep looking for ways to make the models extremely lightweight.